FAQs#

Common Issues#

Invalid Permissions#

Insufficient privileges can prevent a project from successfully performing file operations at runtime. This issue is often related to the application’s permission level, so it is necessary to ensure that the program has the required permissions to write to any specified directories for successful task completion.

These errors can be accompanied by a 0x80004005 code.

Solution: Right-click on the program’s shortcut and select “Run as administrator.”

Data File Errors#

Outdated or missing data files can cause various error messages when using the On-Premise processing mode in the Contact Verify component. The underlying binaries expect all data files to be present in the designated directory, and the component will not function properly if there are any discrepancies.

Resolving Data File Issues#

How do I resolve issues with data files?#

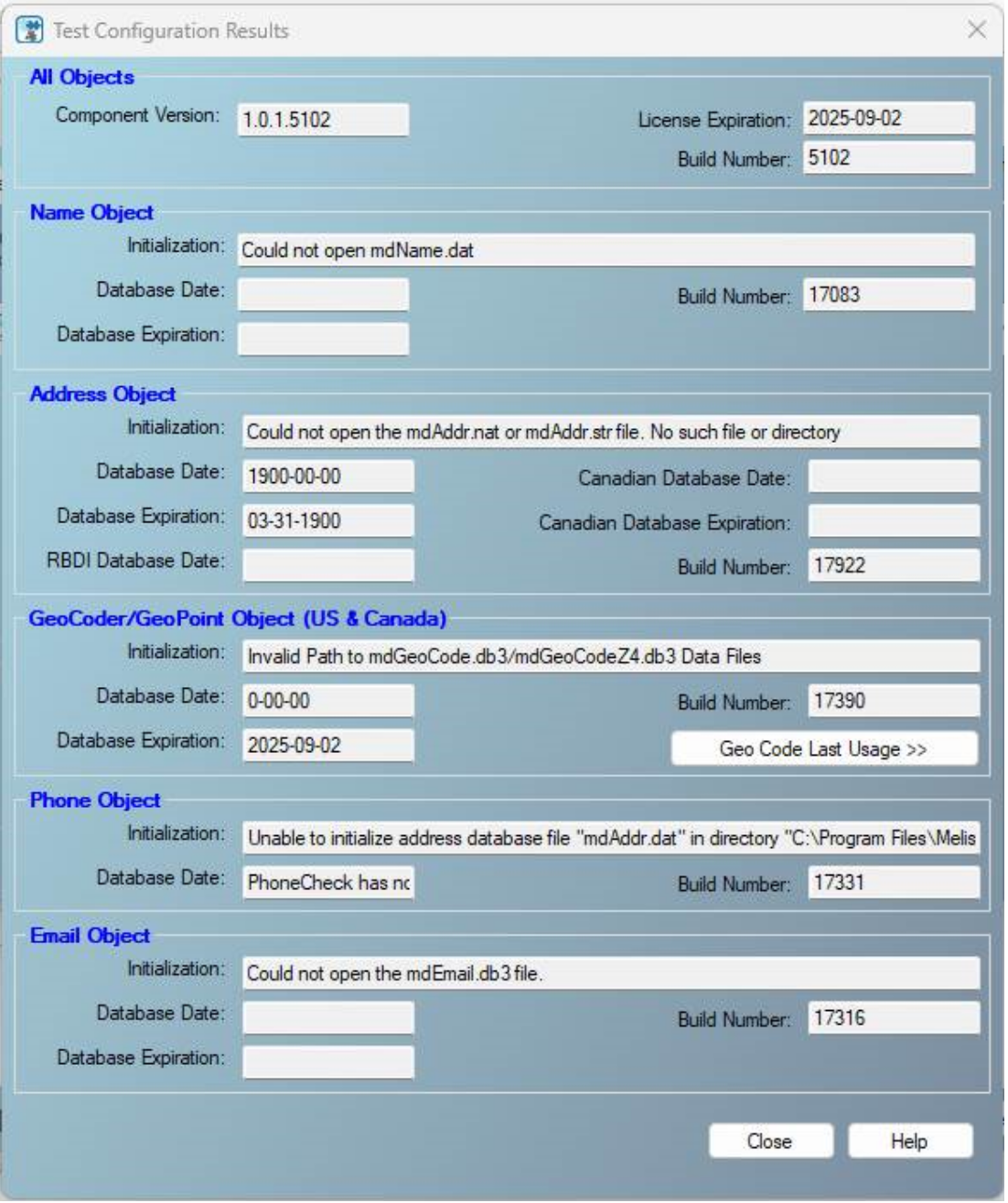

(Note: The error you encounter might differ from the examples shown here.)

Cause: Various issues can occur with data files for the Contact Verify Component, such as an incorrect path to the data files path or outdated/missing data files.

Solution: Follow these steps to ensure the configuration is correct:

Step 1: Check for Required Data Files: Ensure you have all the necessary data files for each on-premise object. The required files can be found here:

Step 2: Verify Data File Path Configuration: Confirm the Data File Path points to the correct location. By default, this path is C:\Program Files\Melissa DATA\DQT\Data.

Step 3: Update Outdated Data Files: If the data files exist and the Data File Path is configured correctly but errors persist, your data files might be outdated. Consider the following actions:

Option 1: Run the Full On-Premise SSIS Installer

SSIS builds are released quarterly. You should receive emails from the sales rep with download links for the latest installer. Running this will update the data files.

If you aren’t receiving these emails, please contact your Sales Rep or our Tech Support.

Option 2: Download Data Files Manually

Use Melissa’s Releases page to download the latest data files using your License String. Links for each object:

Name Object -

https://releases.melissadata.net/browse?MFT=dq_name_data&RELEASE=latest&ID={YourLicense}Address Object -

https://releases.melissadata.net/browse?MFT=dq_addr_data&RELEASE=latest&ID={YourLicense}GeoCoder/GeoPoint Object -

https://releases.melissadata.net/browse?MFT=geocoder_data&RELEASE=latest&ID={YourLicense}Phone Object -

https://releases.melissadata.net/browse?MFT=dq_phone_data&RELEASE=latest&ID={YourLicense}Email Object -

https://releases.melissadata.net/browse?MFT=dq_email_data&RELEASE=latest&ID={YourLicense}

Option 3: Download Data Files Automatically

Use the Melissa Updater, which checks for missing/outdated files and automatically downloads and replaces them.

Memory Allocation Errors#

The size of the buffers allocated for the data flow will significantly impact the performance of an SSIS project. Larger buffers allow more data to be loaded into memory at once, while smaller buffers help manage memory consumption. Both configurations can enhance speed and functionality, so finding the optimal buffer size for a specific system is crucial.

Messages relating to memory errors may resemble:

“A buffer failed while allocating…”

“The Data Flow task failed to create a buffer to call PrimeOutput…”

Solution: There are three properties that can be used to modify the data flow’s buffers and mediate memory allocation issues.

DefaultBufferMaxRows- This represents the maximum number of rows a buffer can hold before being processed and moved to the next component in the data flow.DefaultBufferSize- This value sets the maximum number of bytes in a buffer.AutoAdjustBufferSize- When set to True, this will dynamically adjust buffer size at runtime based on the system’s available memory and the amount of data being processed.

Missing Components#

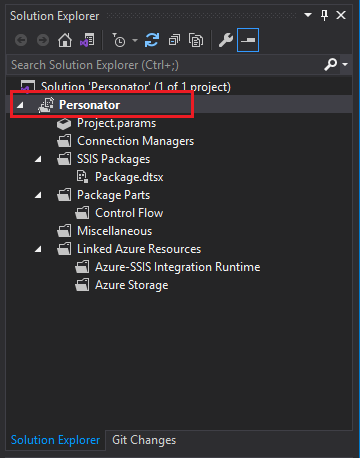

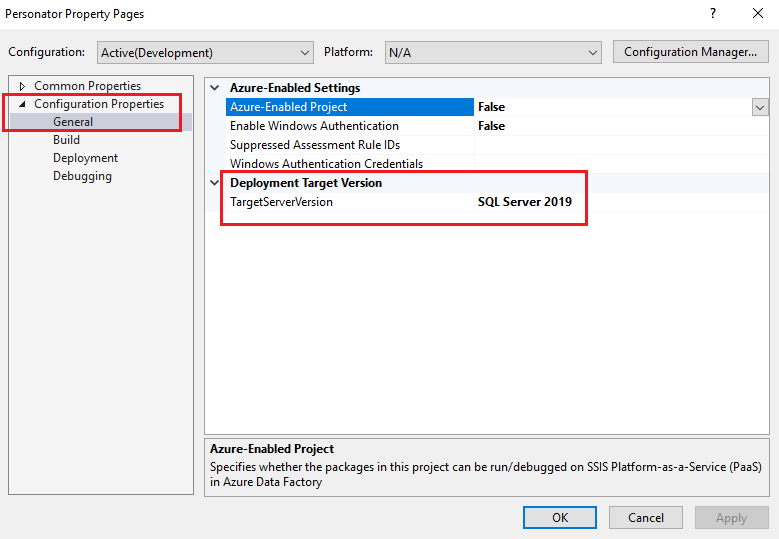

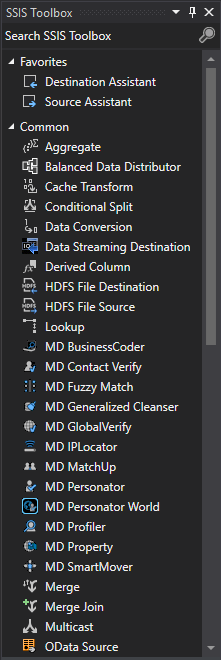

If Melissa’s components do not appear in the SSIS Toolbox after a successful installation, there may be a mismatch between the Target Server Version configured in SSIS and the version of SQL Server used in the target environment on the system.

SSIS Setting Target Server Version Option#

In Visual Studio, verify that the Target Server Version option is set to the corresponding SQL Server instance on the machine.

To set the Target Server Version, in Visual Studio’s Solution Explorer, right click on the SSIS project Node and click Properties

Select Configuration Properties. You will see the Target Server Version; select the desired version of SQL Server.

Once set, the project will reload. Double click the .dtsx package in the Solution Explorer menu and our SSIS components should appear in the SSIS Toolbox.

Public Key Token Errors#

A mismatch between an SSIS project’s Public Key Token and the target SQL Server version can cause errors related to the Public Key Token, which may prevent the successful execution of the project.

Messages related to Public Key Token errors may resemble:

“Public Key Token could not be loaded.”

“Could not load file or assembly…”

SSIS How to Update Public Key Token#

In order to update your existing projects to a newer version of SQL Server, you will need to edit the PublicKeyToken in the code designer page.

Before starting this process we recommend obtaining a list of all the components that utilize PublicKeyToken in the OLD projects.

Updating the PublicKeyToken in Existing Projects

Warning

Please back up the original project file before starting.

Locate the

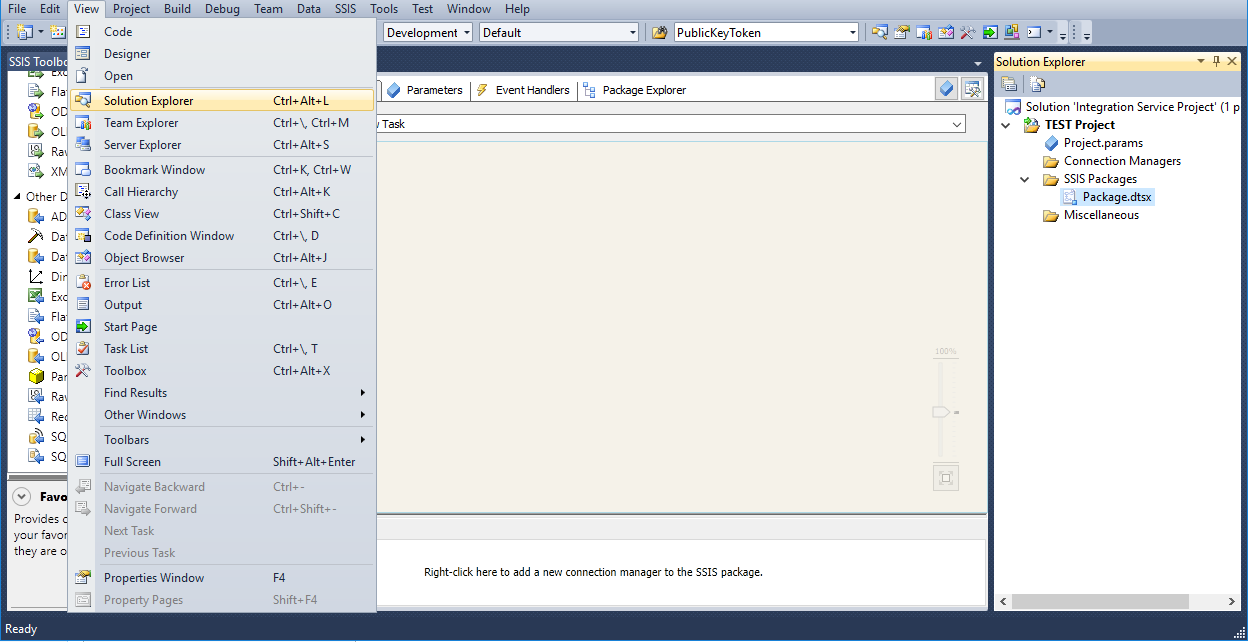

Package.dtsxby going to View > Solution Explorer.

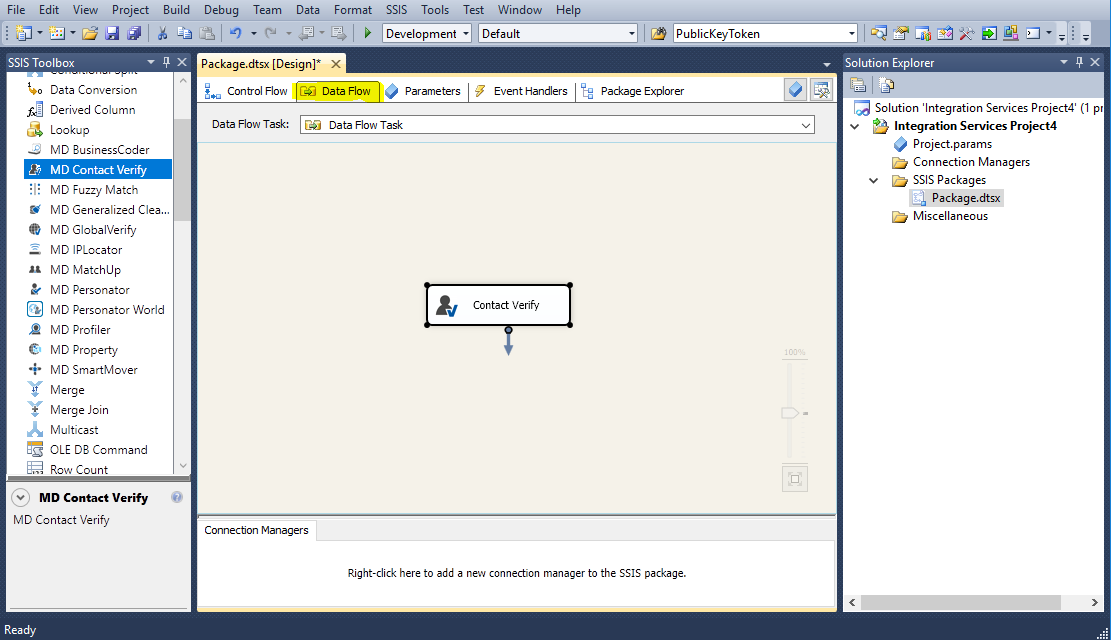

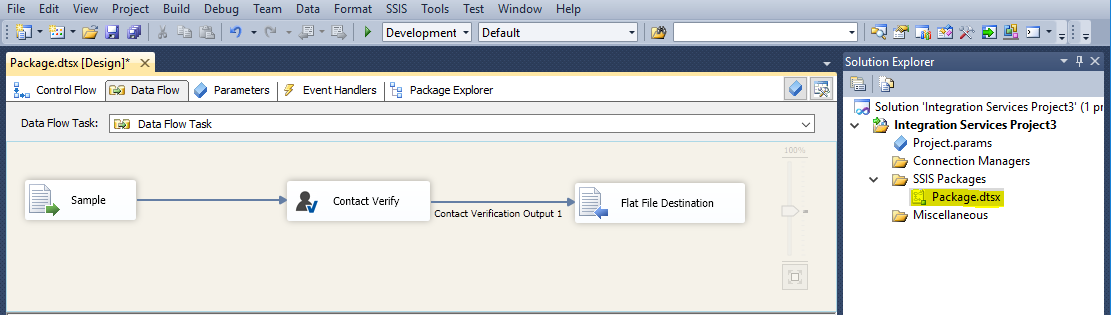

Go to the Data Flow tab and drag a NEW component from the SSIS Toolbox in order to get the NEW PublicKeyToken.

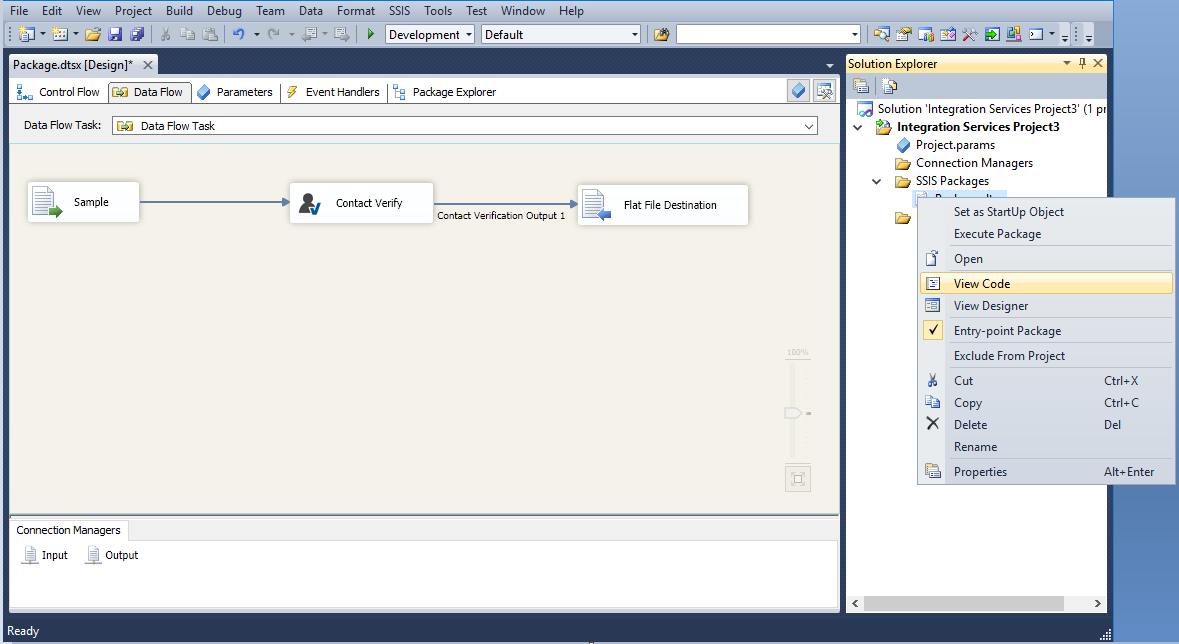

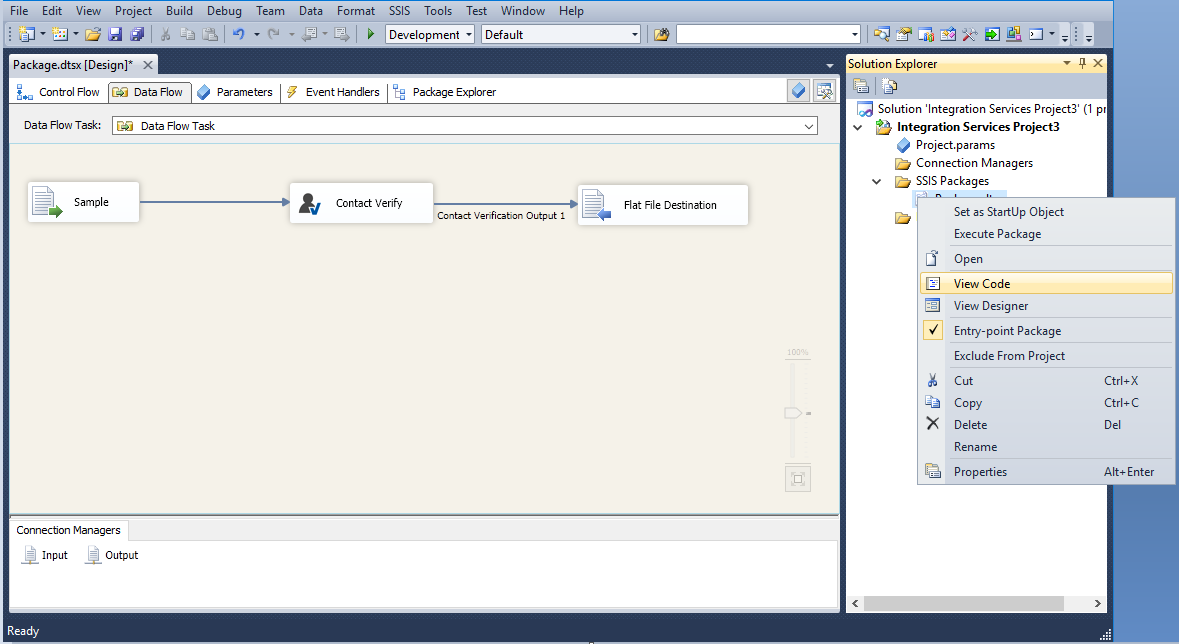

Right click the

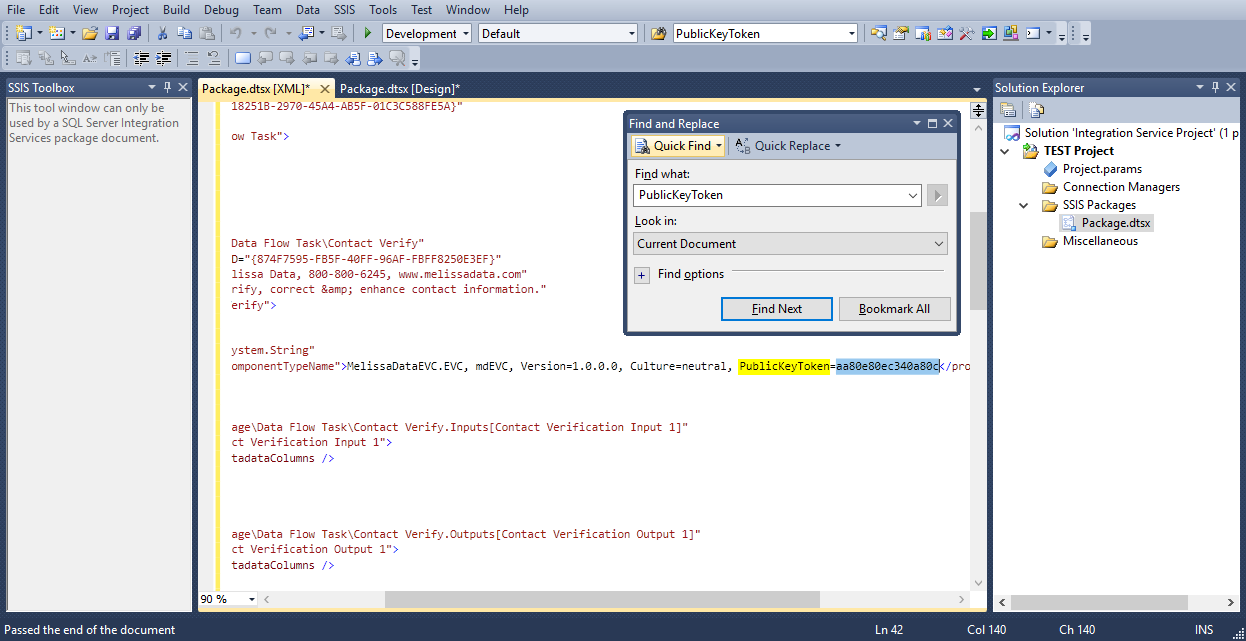

Package.dtsxon the right side in the Solution Explorer and click View Code (or alternatively hit F7). The following window should come up:

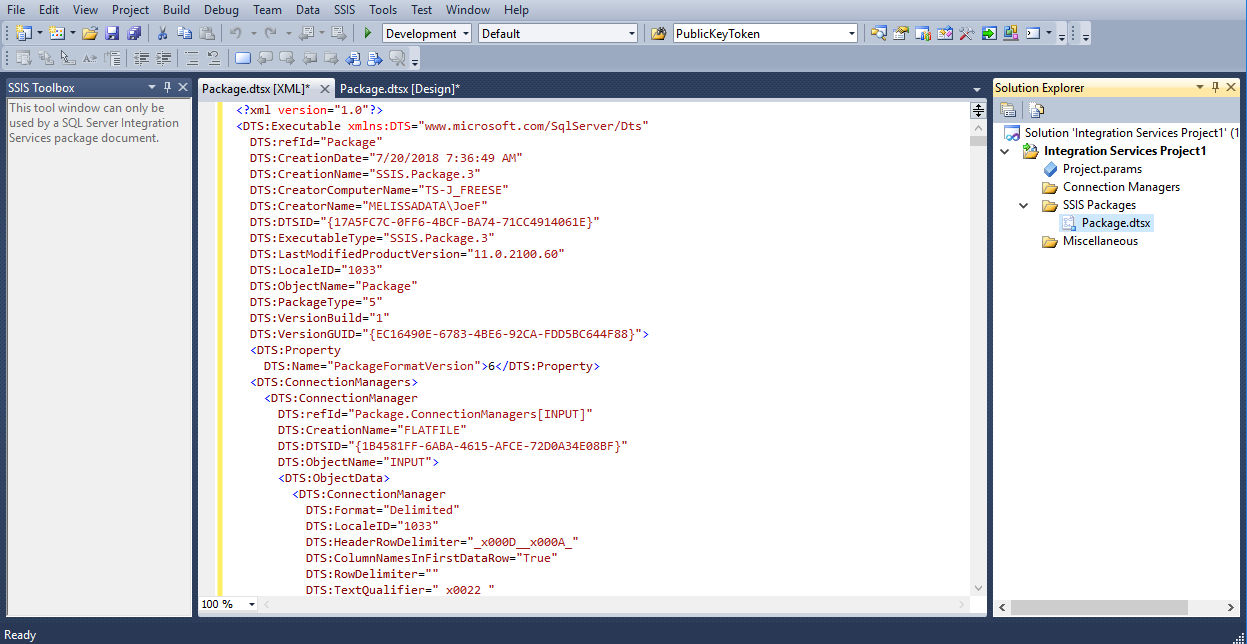

Press

CTRL+Fto open the Find and Replace window. TypePublicKeyTokenin the Find what: field and click Find Next to locate and copy the NEW token value to wordpad. Save this token, it will be used to update the older projects experiencing the issue. (Ex. PublicKeyToken=aa80e80ec340a80c)

Open the old project to be updated in Visual Studio.

Right click

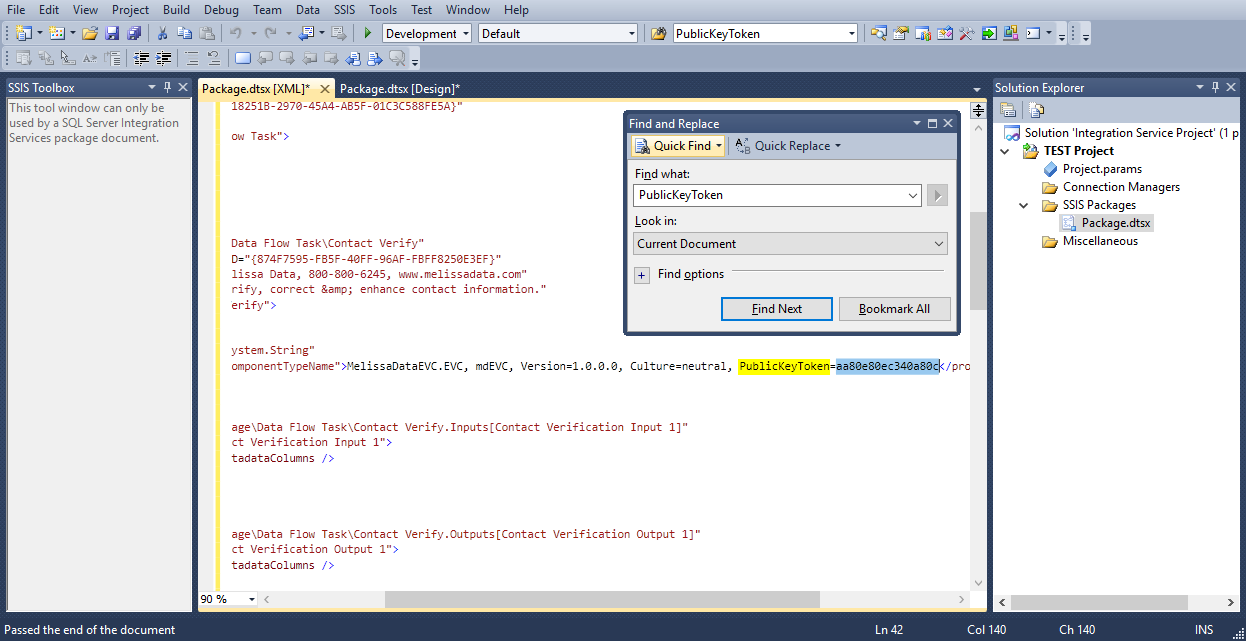

Package.dtsxon the right side under the Solution Explorer and click View Code.

Use

Ctrl+Fto search for PublicKeyToken in the project to be updated.

Once it is found, paste the NEW PublicKeyToken that was collected in step 4, in place of the existing one. Save the project. Now the PublicKeyToken has been updated. (Save, close, and then reopen.)

Data Quality Components for SSIS#

What is affected during an install or upgrade?#

By default the data is stored at this path: C:\Program Files\Melissa DATA\DQT\Data

Sample Projects is located

C:\Program Files\Melissa DATA\DQT\DQS\Samples

Copy of Component DLLS:

C:\Program Files\Melissa DATA\DQT\DQS\SSIS_2005

C:\Program Files\Melissa DATA\DQT\DQS\SSIS_2008

C:\Program Files\Melissa DATA\DQT\DQS\SSIS_2012

C:\Program Files\Melissa DATA\DQT\DQS\SSIS_2014

Object DLLS:

C:\Program Files\Melissa DATA\DQT\DQS\32_bit

C:\Program Files\Melissa DATA\DQT\DQS\64_bit

Copy of Config Files:

C:\Program Files\Melissa DATA\DQT\DQS\Config

Affected During Update or Install:

Component Pipeline Dlls:

C:\Program Files\Microsoft SQL Server\110\DTS\Binn

C:\Program Files (x86)\Microsoft SQL Server\110\DTS\Binn

(Note That 110 refers to SQL SERVER 2012, please look for your corresponding version)

Active Config Used by SSIS:

C:\ProgramData\Melissa DATA

MatchUp Data Files and Configurations:

C:\ProgramData\Melissa DATA\MatchUP

(mdMatchup.mc stores your matchcode and will not be overwritten if one exists, you can copy this to a new install to transfer matchcodes.)

How come I can’t process less than 100 records for SmartMover NCOA?#

USPS regulations state we cannot process NCOA for files less than 100 records. In adherence to the new regulations, SmartMover now checks the first batch to see if it contains less than 100 records. If the batch is less than 100 records we will reject the batch and return an error message. SmartMover will interrupt the processing after processing begins. Please contact your sales representative if you have any questions or concerns regarding this change.

Can we save current settings for DQC for future?#

Yes, Melissa SSIS includes the feature that you can save currents settings for future use. Under File Tab you can save current setting in a .cfg file in local your drive. Under same File tab click “Open” and upload the .cfg file.

Speed Facts for CVC?#

EVC is an enterprise component so performance of EVC is highly dependent on machine configuration. Operating System, RAM, Processor or using network drive for data input or output, make significant impact on performance of EVC. In addition performance also depends upon 2 more main factors:

How you are using EVC?#

It is highly recommended for EVC users, select the only those features i.e. LACSLink(Address Add-on) DNS Lookup(Email, Add-on), which are required. Unnecessary use of add-on and feature will effect the performance.

Type of Data?#

If the input data is really corrupted or having lot of errors, will effect the performance. We have additional 2 different scenarios for using EVC, which will help you to analyse the throughput of the component in different situations.

Profiler Speed?#

Different analysis features directly affect component performance. Options with data aggregation and sort analysis have a high impact on speed of the component. For a list of speed tests, see Profiler FAQ Service Speed.

How do I make Excel Source Drivers work?#

The version of the OLE Drivers must match your Office edition (32bit vs 64bit) in your project properties. If you have 32bit Office, click Project then Properties. Under configuration properties, click “debugging” and set “Run64BitRunTime” to False. If you have 64bit Office then set “Run64BitRunTime” to True.

In which format Data Quality Components(DQC) accept input?#

You can use any format as input for Melissa SSIS components. It can be .csv, .xls, .dbf, xlsx, SQL database etc. In some cases you might not see any column in drop down options of MD SSIS component, in this case you can use “Data Conversion”(in-built component in BIDS) to change the data type of particular missing column to “DT_WSTR”.

How we can update individual Melissa Object rather than updating whole CVC?#

EVC component installs all Melissa Objects in “Binn” folder under DTS folder of SQL Server. If you are using EVC in SQL Server 2008 and need to update only Address Object grab latest mdAddr.dll and replace older mdAddr.dll in following location:

C:\Program Files\Microsoft SQL Server\100\DTS\PipelineComponents

Same way you can update other Melissa Objects i.e for Email Object replace mdEmail.dll, for GeoCoder mdGeo.dll, etc.

If you are installed EVC on SQL Server 2005, the location of Melissa Object will be in following location:

C:\Program Files\Microsoft SQL Server\90\DTS\PipelineComponents

Microsoft Fuzzy Lookup vs. The Data Matching and Cleansing Component?#

The Microsoft transforms are only available with SQL Server enterprise edition which is a significant cost in itself if your organization doesn’t have it already.

All of the following transforms are SQL Server Enterprise edition only:

Data Mining Training Destination

Data Mining Query Component

Fuzzy Grouping

Fuzzy Lookup

Term Extraction

Term Lookup

Dimension Processing Destination

Partition Processing Destination

The MD Fuzzy Matching Component allows more granular control over matching whereas the SSIS native fuzzy matching does not allow any control of its algorithms or access to multiple algorithms. There are only two configurable parameters with the stock Fuzzy lookup and grouping components ie: similarity threshold and maximum number of results per input record.

The MD Fuzzy Matching component has 16 configurable matching algorithms some of them developed specifically by us such as the unique Keyboard distance for misspellings. All industry standard matching algorithms are supported, and probabilistic match settings can be configured for each column of data and the rules can be daisy chained for maximum catch all situations. Also the component has the ability to ignore common data quality issues when matching. ie; whitespaces, irregular characters, etc.

The Melissa Fuzzy Matching Component can also inherently cleanse your data through regular expressions. Regular expressions can be built and tested through an expression builder which is also built in to the component. Cleansing your data prior to matching is a crucial step in order to get the most accurate results for matching.

The component also is able to automatically split up the results into 3 destinations: Matches, Possible Matches and Non-Matches. Based off the percentage score between 2 records, the component will re-direct the results to the appropriate destination according to the percent thresholds you’ve set in the component. Also the Fuzzy SSIS component has full metadata (source and compare scores for multiple algorithms) for tracking record lineages.

One more thing to bear in mind is that, the stock Fuzzy Lookup component in SSIS requires that your reference table be a SQL Table. The Fuzzy Matching Component does not limit you to a SQL Table Reference Database.

The DMCC suite also comes with the MatchUP component, which is specially designed for the unique set of matching problems with Address, Name and Company data fields. MatchUp recognizes combinations, inverse names, Acronyms, Nick names, numerical streets such as 12th vs. twelfth, and so on.

Which Versions of SQL Server/Visual Studio do Your Components Support?#

Melissa currently supports Microsoft SQL Server 2016, 2017, 2019, and 2022.

SQL Server 2014 is supported until August 10th, 2026, after which it will be deprecated.

SQL Server 2012 and earlier versions are deprecated and no longer officially supported.

While components for deprecated SQL Server versions may still be included as part of the SSIS installation, deprecated platforms do not receive new features, updates, or full technical support.

Certain Microsoft SQL Server versions are officially supported by specific Microsoft Visual Studio versions for our components. BusinessCoder and future new components will only be offered in SSIS 2010 and newer.

Officially Supported by Melissa#

Microsoft SQL Server 2022 - Microsoft Visual Studio 2022

SQL Server Data Tools for Visual Studio 2022 - https://docs.microsoft.com/en-us/sql/ssdt/download-sql-server-data-tools-ssdt?view=sql-server-ver15

SQL Server Integration Services Projects - https://marketplace.visualstudio.com/items?itemName=SSIS.SqlServerIntegrationServicesProjects

Microsoft SQL Server 2019 - Microsoft Visual Studio 2019

SQL Server Data Tools for Visual Studio 2019 - https://docs.microsoft.com/en-us/sql/ssdt/download-sql-server-data-tools-ssdt?view=sql-server-ver15

SQL Server Integration Services Projects - https://marketplace.visualstudio.com/items?itemName=SSIS.SqlServerIntegrationServicesProjects

Can also be installed from Visual Studio 2019 Tools > Extension and Updates Section.

Microsoft SQL Server 2017 - Microsoft Visual Studio 2017

SQL Server Data Tools for Visual Studio 2017 - https://docs.microsoft.com/en-us/sql/ssdt/download-sql-server-data-tools-ssdt?view=sql-server-2017#ssdt-for-vs-2017-standalone-installer

Microsoft SQL Server 2016 - Enterprise Edition and RC2 - Microsoft Visual Studio 2015

SQL Server Data Tools for Visual Studio 2015 (14.0.61021.0) - https://msdn.microsoft.com/en-us/mt186501.aspx?f=255&MSPPError=-2147217396

Microsoft SQL Server 2014 - Microsoft Visual Studio 2013 - Deprecated 08/10/2026

Microsoft SQL Server 2012 - Microsoft Visual Studio 2010/2012 - Deprecated

Microsoft SQL Server 2010 - Microsoft Visual Studio 2008 - Deprecated

Microsoft SQL Server 2008 - Microsoft Visual Studio 2005 - Deprecated

Data Type Conversions#

Conversion chart of SSIS data types to SQL Server data types

SSIS Data Type |

SSIS Expression |

SQL Server |

|---|---|---|

single-byte signed integer |

(DT_I1) |

|

two-byte signed integer |

(DT_I2) |

smallint |

four-byte signed integer |

(DT_I4) |

int |

four-byte signed integer |

(DT_I4) |

int |

eight-byte signed integer |

(DT_I8) |

bigint |

eight-byte signed integer |

(DT_I8) |

bigint |

float |

(DT_R4) |

real |

double-precision float |

(DT_R8) |

float |

string |

(DT_STR, «length», «code_page») |

char, varchar |

Unicode text stream |

(DT_WSTR, «length») |

nchar, nvarchar, sql_variant, xml |

date |

(DT_DATE) |

date |

Boolean |

(DT_BOOL) |

bit |

numeric |

(DT_NUMERIC, «precision», «scale») |

decimal, numeric |

decimal |

(DT_DECIMAL, «scale») |

decimal |

currency |

(DT_CY) |

smallmoney, money |

unique identifier |

(DT_GUID) |

uniqueidentifier |

byte stream |

(DT_BYTES, «length») |

binary, varbinary, timestamp |

database date |

(DT_DBDATE) |

date |

database time |

(DT_DBTIME) |

|

database time with precision |

(DT_DBTIME2, «scale») |

time(p) |

database timestamp |

(DT_DBTIMESTAMP) |

datetime, smalldatetime |

database timestamp with precision |

(DT_DBTIMESTAMP2, «scale») |

datetime2 |

database timestamp with timezone |

(DT_DBTIMESTAMPOFFSET, «scale») |

datetimeoffset(p) |

file timestamp |

(DT_FILETIME) |

|

image |

(DT_IMAGE) |

image |

text stream |

(DT_TEXT, «code_page») |

text |

Unicode string |

(DT_NTEXT) |

ntext |

Component Specific FAQ & Optimizations#

SSIS Component |

FAQ |

|---|---|

Matchup |

Basics: Advanced: |

Contact Verify |

|

Personator |

|

Profiler |

|

Global Verify |

|

SmartMover |

|

IP Locator |

|

Property |

|

Business Coder |

SSIS Install Other Than C: Drive#

If you want to install your SQL Server to a path other than the default C: drive, follow this guide.

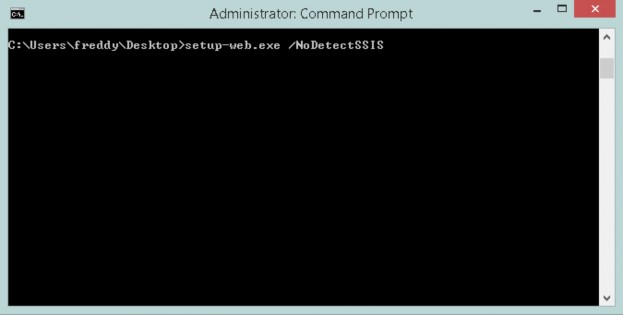

Open Command Prompt as Administrator.

cd to where the installer is located on your machine.

Type the installer name along with the command /NoDetectSSIS

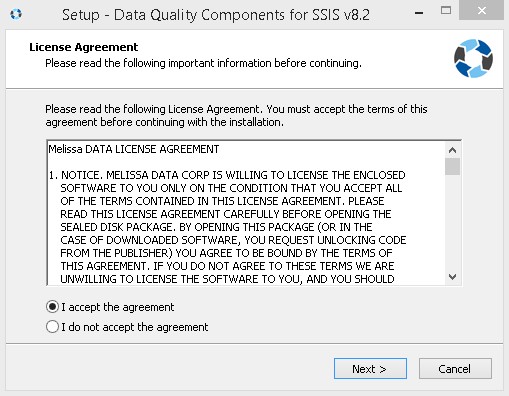

Once the installer appears, accept the agreement and click Next.

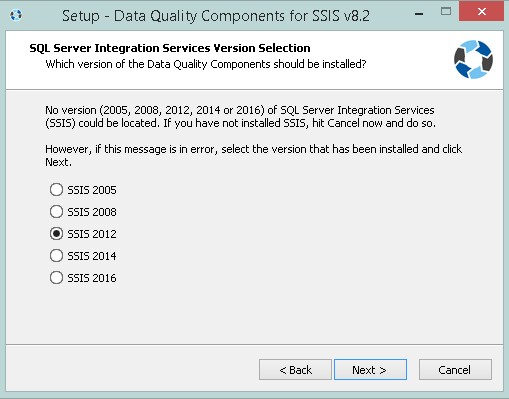

Select which version of SQL Server Integration Services is installed.

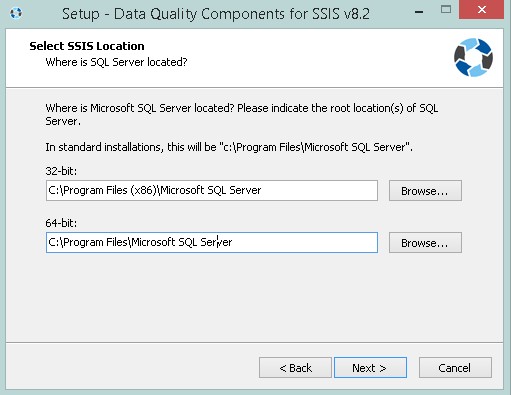

Select the SSIS root directory. Here you can modify where to install SSIS.

Enter your license string, and then select the components you want installed. The Melissa components will now be installed to the chosen directory.

SSIS Service Speed#

Melissa’s SSIS Enterprise level components can process hundreds of thousands of records per hour. SSIS is available in 2 processing modes, Cloud and On Premise, which will affect throughput speeds.

Major Factors#

Major factors impacting SSIS processing speed include:

Number of threads or processes

SSIS Cloud Processing components can handle multiple requests, increasing throughput speeds.

We recommend up to 10 threads for our Cloud APIs; if you require significantly more speed, contact your sales representative to discuss available options.

We recommend creating multiple instances of our on-premise components to increase speed, utilizing a Balanced Data Distributor component to send data to each instance.

Type of input method provided

Using Melissa Address Key (MAK) as input will process faster since MAK is a globally unique identifier

Using parsed data elements (such as AddressLine1, City, and State) instead of unstructured data such as Free Form inputs will result in faster processing speeds.

Quality of input data

Data of poor quality that requires additional input, corrections and standardization will reduce processing speed

Number of output columns

Requesting additional output columns will reduce processing speeds as more data is requested from our services

Premium Mode Processing

Phone and Email services offer Premium mode processing, which will reduce processing speeds due to performing live requests that provide higher accuracy data

Type of SSIS input source utilized

SSIS can use a variety of input sources available via SSIS Toolbox. We recommend OLE DB or Flat file source for faster processing

Data Flow Buffer Size - DefaultBufferMaxRows and DefaultBufferSize Properties

The buffer size controls the amount of rows transferred by the data flow. Specifications for buffer size vary due to environment and input source speed. Typically, a slow source input speed will require a smaller buffer size

Adding Options and Actions

Including additional options or actions from our services will reduce processing speeds as additional data is requested or additional logic is utilized

Utilizing SQL Server Agent/Command Prompt to run projects

Processing speed can increase by utilizing SQL Server Agent or by running the project via Command Prompt. Process quicker without the overhead of Visual Studio

On Premise Components#

Speeds will vary due to hardware differences; higher speeds may be achieved with multiple instances of on-premise components.

SSIS Component |

Throughput (records / hour) |

|---|---|

Contact Verify – Name, Address, Phone, Geo, Email (Express) |

500,000 – 600,000 |

Generalized Cleanser |

9,000,000 – 12,000,000 |

MatchUp (Basic Match Code) |

3,000,000 – 5,000,000 |

Profiler |

800,000 – 1,250,000 |

Cloud Processing Components#

SSIS Component |

Throughput (records / hour) |

|---|---|

Contact Verify – Name, Address, Phone, Geo, Email (Express) |

90,000 – 160,000 |

Contact Verify – Name, Address, Phone, Geo, Email (Premium) |

50,000 – 70,000 |

Personator |

|

SmartMover |

|

Business Coder |

|

Property |

|

Global Verify – Name, Address, Phone (Express), Email (Express) |

110,000 – 150,000 |

Global Verify – Name, Address, Phone (Premium), Email (Premium) |

60,000 – 85,000 |

IPLocator |

|

Personator World – Check – Name, Address, Phone (Express), Email (Express) |

Azure Data Factory (ADF)#

How do I deploy SSIS packages from Visual Studio for execution by SSIS-IR in ADF?#

This guide covers how to deploy an SSIS package that uses Azure SQL Server for both input and output. If you plan to use a different input/output method, the configuration steps will vary.

In this approach, the package is deployed to the SSISDB database on Azure SQL Server. You can then trigger its execution in Azure Data Factory by starting a pipeline that invokes the SSIS Integration Runtime (SSIS-IR).

Important

The SSIS package must be built for SQL Server 2017, as SSIS-IR in Azure Data Factory currently only supports SQL 2017 packages.

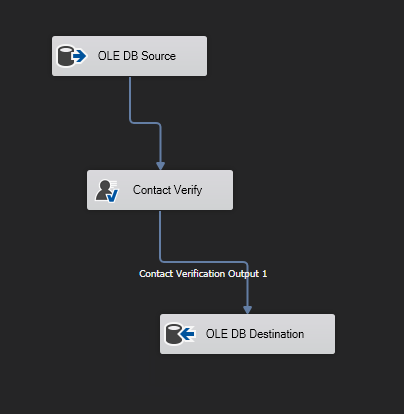

Step 1: Create a 2017 SSIS Package In Visual Studio With Input and Output being Azure SQL Server#

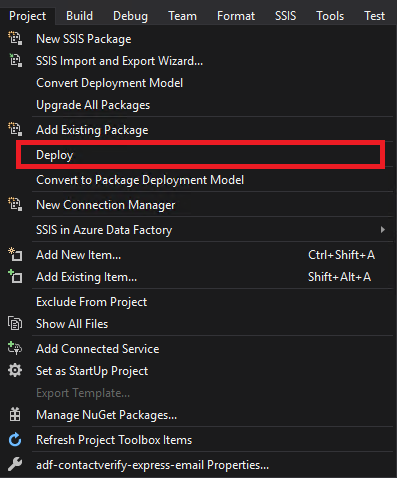

Step 2: Deploy the SSIS Package to Azure SQL Server#

Navigate to Project (top-left menu) → Click Deploy.

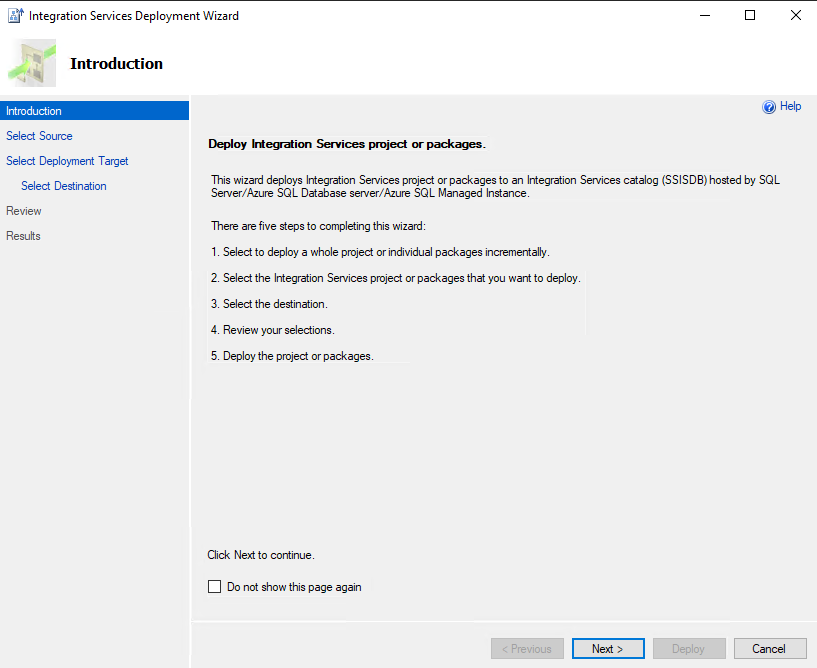

In the Integration Services Deployment Wizard, click Next.

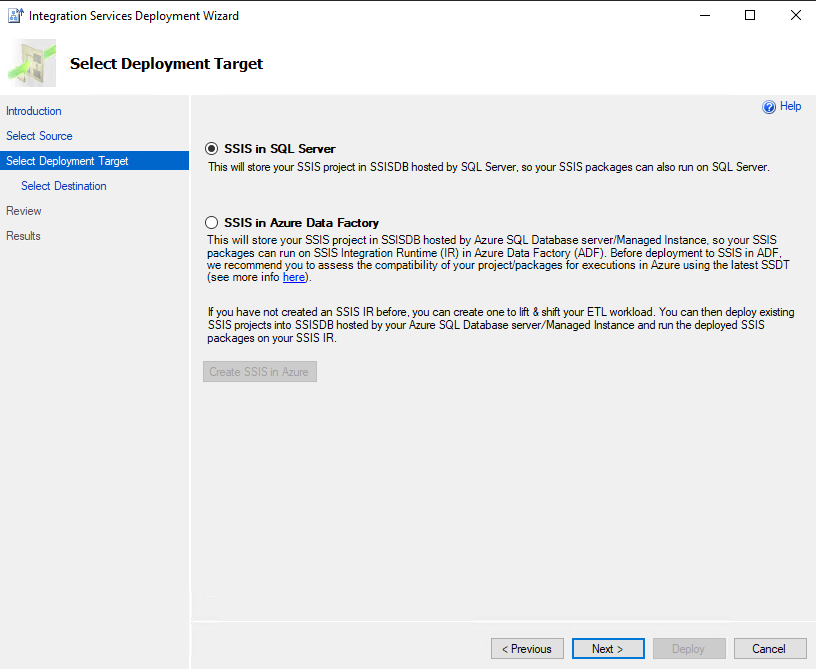

Under the Deployment Target tab:

Choose SSIS in SQL Server or SSIS in Azure Data Factory (both will deploy your package to the SSISDB of your Azure SQL Server).

Click Next.

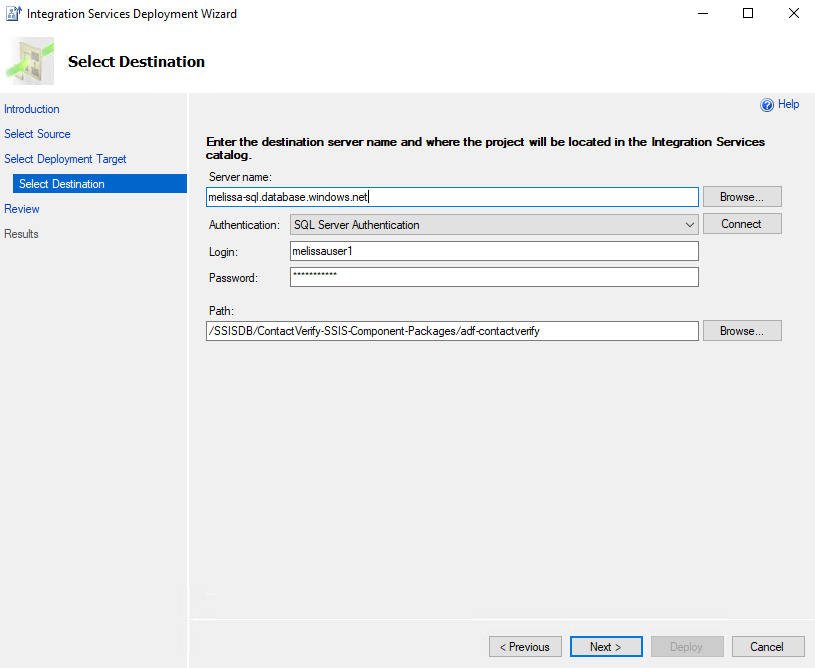

Under the Select Destination tab, enter the following details:

Server Name: Enter your Azure SQL Server name.

Authentication: Select SQL Server Authentication.

Login & Password: Use the credentials created when setting up the Azure SQL Server.

- Click Connect to verify the connection.

- If the connection is successful, the Path text box will become active:

Click Browse…

Under SSISDB, click New Folder… to create a subfolder for SSIS packages (unless you already have one that you would like to use).

Click OK, then Next.

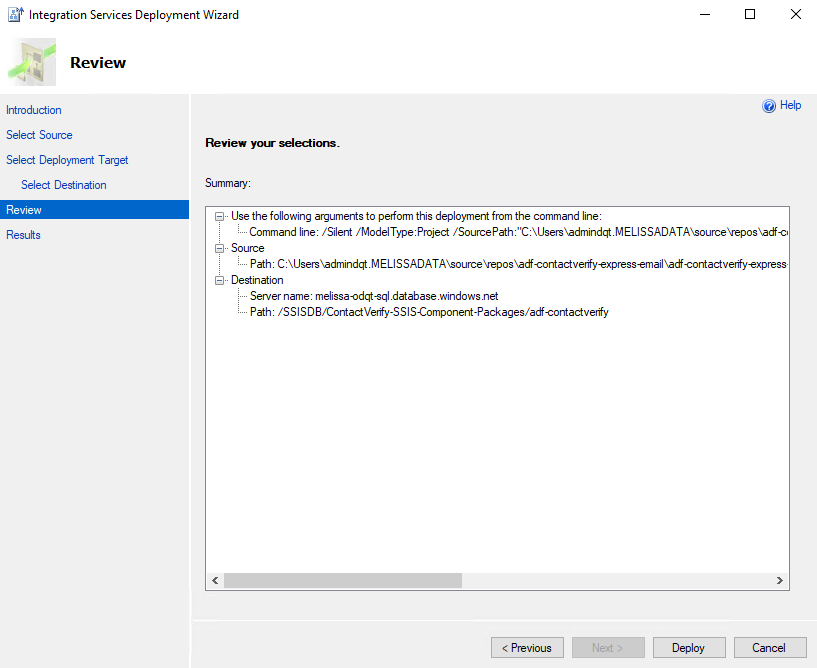

Under the Review tab:

Review the deployment details.

Click Next.

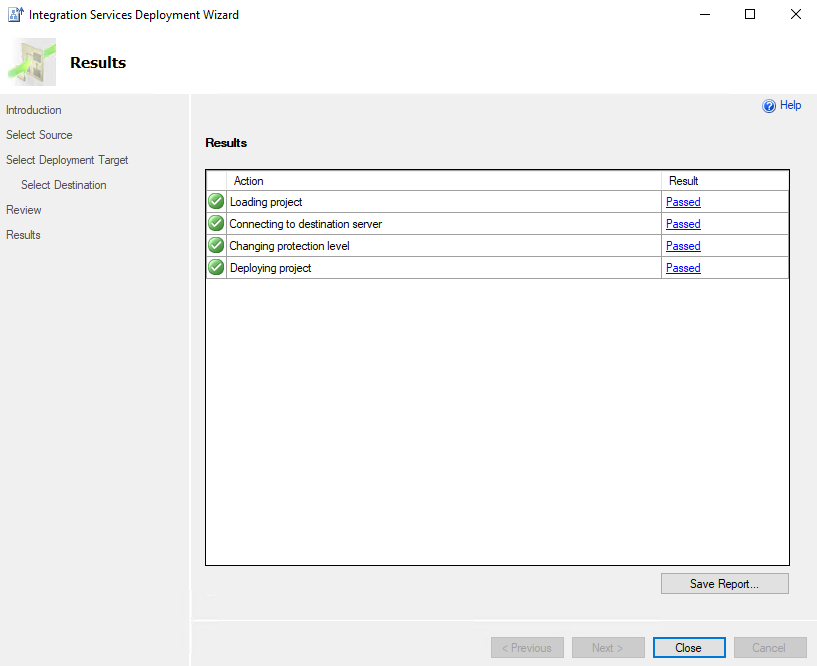

Under the Results tab:

If everything is configured correctly, a success message will confirm that your package has been deployed successfully.

Note

The SSIS Integration Runtime (SSIS-IR) instance linked to this Azure SQL Server must be running at the time of package deployment.

Step 3: Execute Package in Azure Data Factory (ADF) Environment#

Navigate to your Data Factory (V2) instance on the Azure Portal.

Click Launch Studio.

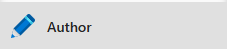

On the left-hand side, click Author.

Click Add New Resource → Pipeline → Pipeline.

In the search box, type

Execute SSIS Package, then drag and drop it into the pipeline.Rename the Pipeline and Execute SSIS Package as desired.

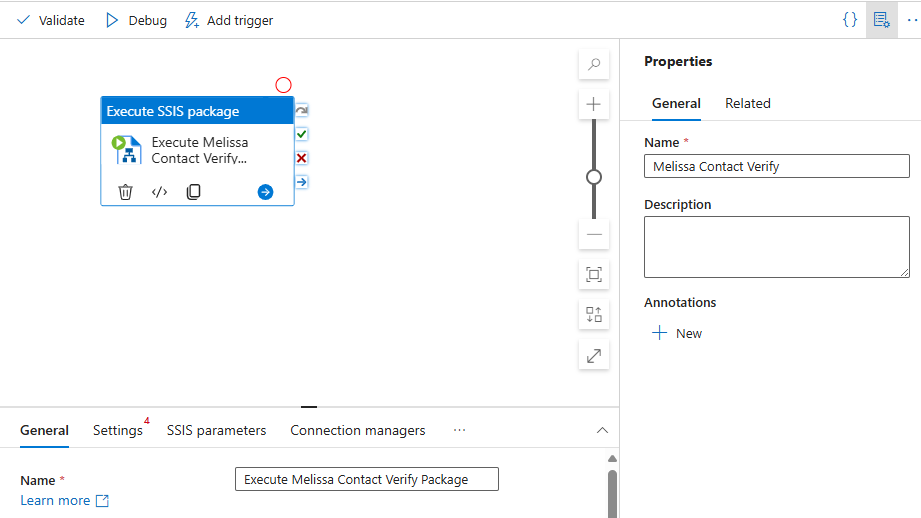

Configure the SSIS Package Execution. Go to the Settings tab:

Azure-SSIS IR: Select your SSIS-IR instance from the dropdown (Ensure it is active and running).

Package Location: Choose

SSISDBfrom the dropdown, then click Refresh to display all newly deployed packages.Folder - Project - Package: Select the path of your package. If you haven’t renamed the package file, it should default to

package.dtsx.

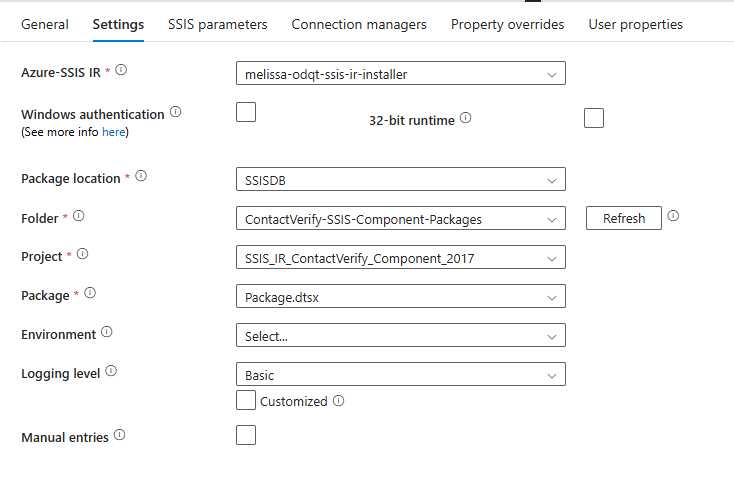

Click Debug.

You will be directed to the Output tab where you can track the execution status of your package. The status will indicate whether the package succeeds or fails.

Estimated Monthly Costs#

How much does it cost to run SSIS on Azure Data Factory? These are estimated monthly Azure costs for Melissa SSIS on an ADF deployment.

Note

Azure offers many different usage options and configurations for its services. The cost may vary significantly depending on the settings and region you select. For the most accurate and customized estimate, you can use the official Azure Pricing Calculator.

Azure SQL Database (General Purpose, 2 vCores)#

Used to store:

SSIS packages (in SSISDB)

Input/output data tables

Breakdown:

- Compute:

2 vCores × $0.508/hr × 730 hrs = $370.84 / month

- Storage:

32 GB data × $0.115/GB = $3.68

9.6 GB log × $0.115/GB = $1.10

→ Total storage = $4.78 / month

Total Estimated Monthly Cost: $373

Azure Data Factory – SSIS Integration Runtime (SSIS-IR)#

Configuration:

Integration Runtime Type: SQL Server Integration Services (SSIS-IR)

Tier: Standard

Instance: A8 v2 (8 vCores, 16 GB RAM, 80 GB disk)

Rate: $0.9448 per hour

Runtime: 160 hours/month (e.g., 8 hrs/day × 5 days/week)

Azure Hybrid Benefit: Enabled (uses your own SQL Server licenses)

Support Plan: Basic (Included)

Licensing Program: Microsoft Customer Agreement (MCA)

Total Estimated Monthly Cost: $151.16

Note

Keeping SSIS-IR running 24/7 would increase this cost significantly.

Azure Blob Storage (East US)#

Used to store setup scripts, installer files, and (optionally) data files.

Configuration:

Account Type: Block Blob Storage

Performance: Standard

Access Tier: Hot

Redundancy: Locally Redundant Storage (LRS)

Capacity: 1,000 GB

File Structure: Flat Namespace

Costs:

Storage: 1,000 GB × $0.0208/GB = $20.80

- Operations:

Write Operations: 100,000 ops × $0.0005 = $0.50

List/Create Container: 100,000 ops × $0.0005 = $0.50

Read Operations: 100,000 ops × $0.0004 = $0.04

Other Operations: 10,000 ops × $0.0004 = $0.01

Data Retrieval: No cost for Hot tier

Data Write: No cost for Hot tier

Total Estimated Monthly Cost: $21.84

Costs Summary#

This is a summary of the approximate monthly totals.

Component |

Estimated Monthly Cost |

|---|---|

Azure SQL Database |

$373.00 |

SSIS Integration Runtime |

$151.16 |

Azure Blob Storage |

$21.84 |

Azure File Share (Standard) |

$2.03 (if applicable) |

Azure File Share (Premium) |

$24.00 (if applicable) |